Recently I had a huge task: improve the ETL process between MS SQL servers so that nearly a billion rows are transferred every day in real time. That is, as soon as the master SQL server receives new rows, they must be transferred immediately to 8 satellite MS SQL servers.

The back end: every MS SQL server runs on one 8-core Intel Xeon E5-2690 2.9GHz CPU with 384GB of memory and enterprise SSD storage, with separate RAID10 arrays for data, index and log files. TempDB runs on a RAM drive (in memory). The main database table is split into filegroups by day.

Before the improvement, I measured a throughput of up to around 800,000 rows per minute. Since the amount of data was growing every single day, that was too slow. For reference, the rows were moved to the satellite servers by a stored procedure that transferred them in chunks of up to 1,000,000 rows.
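To see how little headroom that left, here is a back-of-the-envelope calculation in Python. It is only a sketch: it rounds "nearly a billion rows" up to exactly one billion and assumes the load is spread evenly over the day, which real traffic rarely is. The function names are made up for illustration.

```python
# Assumption: "nearly a billion rows per day" rounded up to exactly 1e9,
# arriving evenly over 24 hours (real traffic will have peaks).
ROWS_PER_DAY = 1_000_000_000
MINUTES_PER_DAY = 24 * 60


def required_rows_per_minute(rows_per_day):
    """Average throughput needed just to keep up with the daily volume."""
    return rows_per_day / MINUTES_PER_DAY


def headroom(observed_rows_per_minute, required):
    """Ratio of observed to required throughput (1.0 means no spare capacity)."""
    return observed_rows_per_minute / required


required = required_rows_per_minute(ROWS_PER_DAY)
print(round(required))                         # 694444
print(round(headroom(800_000, required), 2))   # 1.15
```

Under these assumptions the old process had only about 15% of spare capacity at its best measured rate, so any peak in incoming rows or further growth in volume would make it fall behind.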

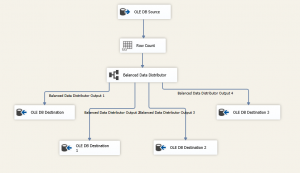

To improve the throughput, we decided to replace the stored procedure (USP) with an SSIS package, since SSIS is probably the best place for ETL processes, and of course to multi-thread the process. After a lot of tests, we reached the best performance with the data flow shown in the figure.

The most important part is the Balanced Data Distributor. With this component, the SSIS package was able to write into one table from 4 separate threads. This helped a lot: every additional thread added another 500,000 rows per minute of throughput (with a single thread and without the component, SSIS reached up to 1,000,000 rows per minute). The total throughput is now up to 3 million rows, or 1.5GB of data, per minute in real time from the master MS SQL server to the 8 satellite MS SQL servers.
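For readers who have not used the component, the idea can be sketched outside SSIS. The Python below is a conceptual illustration only, not the package itself: it hands batches of rows to 4 worker threads in round-robin order, and each worker "writes" its batch to a shared destination. The names `distribute` and `write_batch` and the in-memory list standing in for the table are all assumptions made for the example, and Python threads show the structure here, not the speed.

```python
import queue
import threading


def distribute(batches, n_workers, write_batch):
    """Hand batches to n_workers threads round-robin.

    Returns the number of rows each worker wrote.
    """
    # One bounded queue per worker so a slow writer applies back-pressure.
    queues = [queue.Queue(maxsize=4) for _ in range(n_workers)]
    written = [0] * n_workers

    def worker(idx):
        while True:
            batch = queues[idx].get()
            if batch is None:  # sentinel: no more batches for this worker
                break
            write_batch(idx, batch)
            written[idx] += len(batch)  # each worker touches only its own slot

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n_workers)]
    for t in threads:
        t.start()
    for i, batch in enumerate(batches):
        queues[i % n_workers].put(batch)  # round-robin assignment
    for q in queues:
        q.put(None)
    for t in threads:
        t.join()
    return written


# Hypothetical stand-in for the destination table: a list guarded by a lock.
destination = []
lock = threading.Lock()


def write_batch(worker_idx, batch):
    with lock:
        destination.extend(batch)


rows = list(range(10_000))
batches = [rows[i:i + 1_000] for i in range(0, len(rows), 1_000)]
per_worker = distribute(batches, 4, write_batch)
print(per_worker)        # [3000, 3000, 2000, 2000]
print(len(destination))  # 10000
```

In the real package the writers are separate destination components inside the data flow, and the gain comes from the server accepting several concurrent bulk inserts into the same table.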

I hope this helps someone save a lot of time and put together, in a short time, a really fast solution for moving huge amounts of data between servers.

If you need help with any of the details, leave a comment or write me an email!